[Supervised ML] Feature Engineering - 7


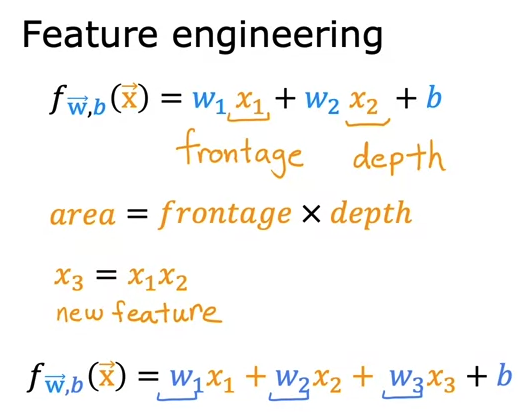

1. What is Feature Engineering?

Feature engineering means using intuition about the problem to design new features by transforming or combining the original features.

Example) House price.

If we are given the dimensions of a house, such as its length and width, we can create a new feature to include when predicting its price. For example, we can create an area feature by multiplying length and width.
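As a minimal sketch of the house example, the area feature can be built with NumPy. The values and the variable names (`length`, `width`, `area`, `X`) are made up for illustration and are not from the course material.

```python
import numpy as np

# Hypothetical example data: length and width of three houses.
length = np.array([20.0, 30.0, 25.0])
width = np.array([40.0, 35.0, 50.0])

# Engineered feature: area = length * width (element-wise, one value per house).
area = length * width

# Stack the original and engineered features into one matrix, one row per house.
X = np.column_stack([length, width, area])

print(area)     # engineered feature values
print(X.shape)  # (number of houses, number of features)
```

The model can then learn a weight for `area` alongside the weights for `length` and `width`.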

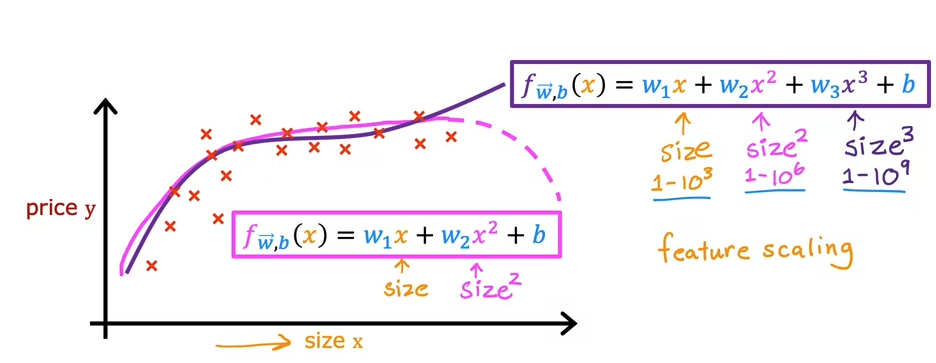

2. Feature engineering in Polynomial Regression

Sometimes a straight line does not fit the data well, and we may want to fit a curve instead.

We can do this with feature engineering by raising x to powers such as x^2 and x^3 and using those as new features. The new features take on very different ranges of values than the original x, though. This is where feature scaling comes in: we need to rescale the features so that gradient descent converges well.
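The sketch below shows how quickly the ranges diverge and one way to rescale them. It assumes z-score normalization as the scaling method, and the input values and names (`x`, `X_poly`, `X_scaled`) are hypothetical.

```python
import numpy as np

# Hypothetical raw feature with values 1 through 10.
x = np.arange(1.0, 11.0)

# Engineered polynomial features: x, x^2, x^3 as three columns.
X_poly = np.column_stack([x, x**2, x**3])

# The columns now span very different ranges (up to 10, 100 and 1000).
print(X_poly.max(axis=0))

# Z-score normalization: subtract each column's mean, divide by its standard deviation.
mu = X_poly.mean(axis=0)
sigma = X_poly.std(axis=0)
X_scaled = (X_poly - mu) / sigma

# After scaling, every column has mean 0 and standard deviation 1.
print(X_scaled.mean(axis=0))
print(X_scaled.std(axis=0))
```

The same `mu` and `sigma` would need to be reused to scale any new input before making a prediction.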

Powers such as x^2 and x^3 are not the only choices of feature. Another option is the square root of x.

