
My Progress

[Advanced Learning Algorithms] Neural Network - 1

1. Neurons and the brain

Origins: Algorithms that try to mimic the brain

Development roadmap:

speech -> images -> text (NLP) -> ...

With the development of large neural networks and GPUs, we can now perform many different tasks using "big data".

2. Example - Demand Prediction

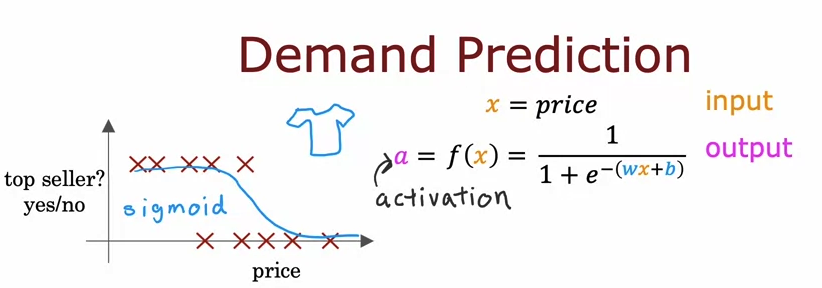

We use the sigmoid function to solve the classification problem. Earlier we called this function f(x). In a neural network, we call its output the activation.

This can be thought of as a neuron, which takes in an input and produces an output through the neuron's function.
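As a minimal sketch of such a neuron: the weight `w`, the bias `b`, and the input value below are hypothetical, chosen only so the arithmetic is easy to follow. The neuron's activation is the sigmoid of `w * x + b`.

```python
import math


def sigmoid(z: float) -> float:
    """Logistic function: maps any real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(x: float, w: float, b: float) -> float:
    """A single neuron: takes an input x and returns the activation sigmoid(w*x + b)."""
    return sigmoid(w * x + b)


# Hypothetical parameters and input, for illustration only
w, b = 0.5, -2.0
price = 4.0

a = neuron(price, w, b)  # w*price + b = 0.0, and sigmoid(0.0) = 0.5
print(a)
```

The activation can be read as the estimated probability for the positive class; with other values of `w` and `b` the same input would give a different probability.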

A more complex example:

Let's say we have four features: price, shipping cost, marketing, and material. We want to predict the probability that a product is a top seller. For this prediction, we want to take into account affordability, awareness, and perceived quality. We estimate these three quantities from our four features.

We call the inputs the input layer and the final output the output layer. The estimates in between (affordability, awareness, perceived quality) are activations, and the layer that produces them is called a hidden layer. We can think of this hidden layer as a kind of feature engineering.

3. Neural Network Layer

3-1 Neural Network Layer Intuition

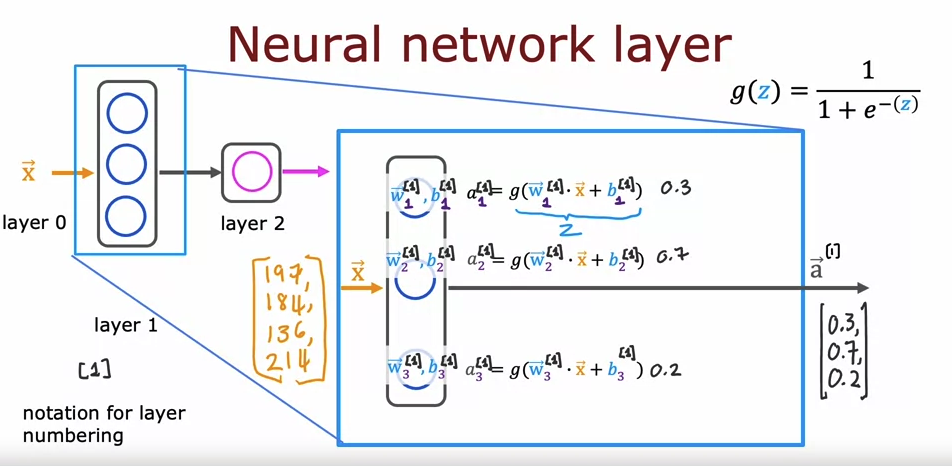

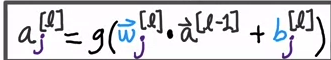

Each neuron has its own function and receives the layer's input. Each neuron in the layer applies its function to that input, and the resulting outputs are used as the input to the next layer.

j indexes the unit (neuron) within a layer, and L indexes the layer.
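The layer computation described above can be sketched in NumPy. This is only an illustration under stated assumptions: the input values are made up, the weights are random rather than trained, and storing the parameters of unit j in column j of `W` is a convention chosen for this sketch.

```python
import numpy as np


def sigmoid(z):
    """Logistic function applied element-wise."""
    return 1.0 / (1.0 + np.exp(-z))


def dense(a_in, W, b):
    """Compute one layer: unit j uses column W[:, j] and bias b[j]."""
    units = W.shape[1]
    a_out = np.zeros(units)
    for j in range(units):
        z = np.dot(W[:, j], a_in) + b[j]
        a_out[j] = sigmoid(z)
    return a_out


# Hypothetical, made-up input: price, shipping cost, marketing, material
x = np.array([0.5, 0.2, 0.8, 0.6])

# Random (untrained) parameters, for illustration only
rng = np.random.default_rng(0)
W1, b1 = rng.normal(size=(4, 3)), np.zeros(3)  # hidden layer with 3 units
W2, b2 = rng.normal(size=(3, 1)), np.zeros(1)  # output layer with 1 unit

a1 = dense(x, W1, b1)   # hidden-layer activations, one value per unit
a2 = dense(a1, W2, b2)  # output of the network, a value between 0 and 1

print(a1.shape, a2.shape)
```

The output of the first layer (`a1`) is passed directly as the input of the second layer, which is the chaining of layers described in the text.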

3-2 Building a Neural Network

#Create layers, then make a model

#Imports assumed by the snippets below

import numpy as np

from tensorflow.keras import Sequential

from tensorflow.keras.layers import Dense

#Way 1

layer_1 = Dense(units=3, activation="sigmoid")

layer_2 = Dense(units=1, activation="sigmoid")

model = Sequential([layer_1, layer_2])

#Way 2

model = Sequential([Dense(units=3, activation="sigmoid"),

Dense(units=1, activation="sigmoid")])

#Training examples

x = np.array([[200.0, 170.0],

[230.0, 180.0],

.

.])

y = np.array([1,0,0,1])

model.compile(..)

model.fit(x,y)

#Run inference on new data x_new

model.predict(x_new)

4. Data in TensorFlow

To build a model in TensorFlow, NumPy, or another library, we have to know how that library represents data.

Here we look specifically at how data is represented in TensorFlow.

x = np.array([[200.0, 17.0]])

In Python, a matrix is written with square brackets. The example above has two pairs of brackets instead of one.

The double brackets make this a 2D array, here a 1 x 2 matrix (one row, two columns). With only one pair of brackets, it would be a 1D vector.

As another example,

1 2 3

4 5 6

This 2 x 3 matrix can be represented as in the example below:

x = np.array([[1, 2, 3],

[4, 5, 6]])

Also,

The 1 x 2 matrix [200 17] -> x = np.array([[200, 17]])

The 2 x 1 matrix with rows 200 and 17 -> x = np.array([[200],

[17]])

x = np.array([200, 17]) would be a 1D vector.
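A quick way to check these cases is to print the `shape` of each array. The variable names below are chosen only for this illustration.

```python
import numpy as np

row = np.array([[200, 17]])             # 1 x 2 matrix: one row, two columns
col = np.array([[200], [17]])           # 2 x 1 matrix: two rows, one column
vec = np.array([200, 17])               # 1D vector: no rows or columns
mat = np.array([[1, 2, 3], [4, 5, 6]])  # 2 x 3 matrix

print(row.shape)  # (1, 2)
print(col.shape)  # (2, 1)
print(vec.shape)  # (2,)
print(mat.shape)  # (2, 3)
```

The number of nested bracket levels matches the number of dimensions (`ndim`): two levels give a 2D matrix, one level gives a 1D vector.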
